“When the structure is sound, movement becomes effortless.”

Most people expect security foundations and governance to be boring. Policy documents. Checklists. Frameworks. Meetings.

AWS, and seasoned security architects, know better.

Security Foundations and Governance are not about control. They are about alignment.

They are what allow everything else, detection, response, infrastructure, identity, and data protection, to function without friction. This is why Domain 6 exists. And why it quietly determines whether every other domain succeeds or fails.

1. What AWS Means by “Security Foundations”

AWS does not treat security foundations as a product or a service. It treats them as operating conditions.

Security foundations answer questions like:

• Who is responsible for what?

• How are decisions made?

• How do we know when something is “secure enough”?

• How do we scale security without slowing delivery?

In AWS terms, foundations are built on:

• Shared Responsibility

• Well-Architected principles

• Standardized controls

• Continuous improvement

• Clear ownership

If those are missing, everything else becomes reactive.

Key Takeaway: On the exam and in real life, treat security foundations as mandatory, not optional. If a question describes a scenario with ambiguous responsibility, pause and clarify who owns what before acting.

2. The Shared Responsibility Model: The First Gate

Every AWS security exam, especially the Security Specialty, tests one thing relentlessly: Do you understand what AWS secures…and what you must secure yourself?

AWS is responsible for:

• Physical data centers

• Underlying hardware

• The cloud infrastructure itself

You are responsible for:

• Identity and access

• Network controls

• Data protection

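• OS and application security (on services such as EC2; for managed services such as RDS and Lambda, AWS patches the underlying OS)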

• Configuration

Governance begins the moment you clearly accept that responsibility.

Most real-world failures, and many exam traps, happen when responsibility is blurred.

3. Governance Is How You Scale Trust

Governance is not about saying “no.” It’s about creating guardrails so teams can move quickly without breaking things.

AWS governance relies on:

• AWS Organizations

• Service Control Policies (SCPs)

• Account separation

• Tagging standards

• Centralized logging and monitoring

• Defined escalation paths

Exam cue: If a question asks you to prevent risky behavior across accounts without managing individual permissions, the answer is almost always SCPs.

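Governance operates above IAM, not instead of it. SCPs cap the permissions available in an account but never grant any, so IAM policies are still required.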
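To make the "above IAM, not instead of it" idea concrete, here is a minimal sketch of how the two layers combine. It is a deliberately simplified model, not AWS's full policy evaluation logic: the `Statement` class, `decide`, and `is_permitted` are hypothetical names, actions are matched by exact string or a bare `*`, and resource policies, permission boundaries, and conditions are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    """One simplified policy statement: an effect applied to exact action names."""
    effect: str                                   # "Allow" or "Deny"
    actions: set[str] = field(default_factory=set)


def decide(statements: list[Statement], action: str) -> str:
    """Return 'Deny', 'Allow', or 'NoMatch' for a single policy layer."""
    matching = [s.effect for s in statements
                if action in s.actions or "*" in s.actions]
    if "Deny" in matching:
        return "Deny"                             # an explicit deny wins within a layer
    return "Allow" if "Allow" in matching else "NoMatch"


def is_permitted(scp: list[Statement], iam: list[Statement], action: str) -> bool:
    """An action goes through only if BOTH layers allow it."""
    return decide(scp, action) == "Allow" and decide(iam, action) == "Allow"


# Illustrative data: the SCP allows everything except stopping CloudTrail logging.
scp = [Statement("Allow", {"*"}), Statement("Deny", {"cloudtrail:StopLogging"})]
iam = [Statement("Allow", {"cloudtrail:StopLogging", "s3:GetObject"})]

print(is_permitted(scp, iam, "s3:GetObject"))            # True: both layers allow
print(is_permitted(scp, iam, "cloudtrail:StopLogging"))  # False: the SCP denies it
print(is_permitted(scp, iam, "ec2:RunInstances"))        # False: the SCP alone grants nothing
```

The last line is the one exam questions lean on: an SCP that allows an action does not give anyone that permission. It only leaves room for an IAM policy to grant it.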

4. Well-Architected Security Pillar: The Quiet Backbone

The AWS Well-Architected Framework is foundational to this domain.

The Security Pillar emphasizes:

• Strong identity foundations

• Traceability

• Infrastructure protection

• Data protection

• Incident response

You’ve already studied all of these.

Domain 6 exists to show how they fit together.

AWS wants you to think:

• Holistically

• Long-term

• With trade-offs in mind

On the exam, this shows up as:

• “Which solution is the most scalable?”

• “Which approach reduces operational overhead?”

• “Which option aligns with AWS best practices?”

Governance favors simplicity, repeatability, and clarity.

5. Policies, Standards, and Automation

In AWS, policy without automation is aspirational. Automation without policy is dangerous.

Strong governance includes:

• Infrastructure as Code (CloudFormation, Terraform)

• Automated security checks

• Preventive controls (SCPs)

• Detective controls (AWS Config rules, GuardDuty, Security Hub)

• Corrective actions (Lambda-based remediation)

Exam cue: If the question says “ensure compliance continuously,” the answer involves automation, not manual review. Governance is what turns security into a system, not an ongoing project.
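As a rough illustration of a detective check feeding a corrective action, the sketch below evaluates a resource against a tagging standard and turns the finding into a remediation plan. Everything here is an assumption for illustration: the required tag names, the function names, and the action strings are hypothetical, and the code makes no AWS calls. In practice this logic would typically sit behind an AWS Config rule with a Lambda-based remediation.

```python
REQUIRED_TAGS = {"Owner", "Environment", "CostCenter"}  # hypothetical tagging standard


def evaluate_tags(resource_id: str, tags: dict[str, str]) -> dict:
    """Detective step: compare a resource's tags against the standard."""
    # A tag counts as missing if the key is absent or its value is empty.
    missing = sorted(t for t in REQUIRED_TAGS if not tags.get(t))
    return {
        "resource_id": resource_id,
        "compliance": "NON_COMPLIANT" if missing else "COMPLIANT",
        "missing_tags": missing,
    }


def plan_remediation(finding: dict) -> list[str]:
    """Corrective step: turn a finding into actions for an automated handler."""
    if finding["compliance"] == "COMPLIANT":
        return []
    return [f"notify-owner:{finding['resource_id']}"] + [
        f"request-tag:{tag}" for tag in finding["missing_tags"]
    ]


finding = evaluate_tags("i-123", {"Owner": "team-a"})
print(finding["compliance"])      # NON_COMPLIANT
print(plan_remediation(finding))
# ['notify-owner:i-123', 'request-tag:CostCenter', 'request-tag:Environment']
```

The policy (which tags are required) lives in one place and the check runs the same way every time, which is the difference between continuous compliance and a periodic manual review.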

Top 3 Exam Gotchas: Domain 6

1. Over-relying on IAM and neglecting the power of Service Control Policies (SCPs) for organization-wide governance.

2. Focusing on manual reviews instead of leveraging automation for continuous compliance.

3. Choosing the most restrictive answer on the exam rather than the one that balances security, cost, and operational impact.

Key Takeaway: The “safe” answer is not always the correct one. Look for governance and automation at scale.

6. Risk Management: Choosing, Not Eliminating

AWS does not expect you to eliminate all risk.

It expects you to:

• Identify it

• Understand it

• Accept, mitigate, or transfer it intentionally

This is why governance includes:

• Risk registers

• Compliance mappings

• Business context

• Cost-awareness

On the exam:

The “best” answer is rarely the most restrictive one. It is the one that balances security, cost, and operational impact.
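The identify, understand, and treat cycle in this section can be sketched as a tiny risk register. The scoring scale, the appetite threshold, and the decision rule below are assumptions chosen for illustration, not an AWS-defined method; real registers use whatever scale the organization has agreed on.

```python
from dataclasses import dataclass
from enum import Enum


class Treatment(Enum):
    ACCEPT = "accept"
    MITIGATE = "mitigate"
    TRANSFER = "transfer"


@dataclass
class Risk:
    name: str
    likelihood: int        # 1 (rare) to 5 (almost certain); assumed scale
    impact: int            # 1 (minor) to 5 (severe); assumed scale
    mitigation_cost: int   # rough relative cost, 1 to 5
    insurable: bool = False

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def choose_treatment(risk: Risk, appetite: int = 6) -> Treatment:
    """Pick a treatment intentionally instead of defaulting to 'lock it down'."""
    if risk.score <= appetite:
        return Treatment.ACCEPT        # within appetite: document it and move on
    if risk.insurable and risk.mitigation_cost >= 4:
        return Treatment.TRANSFER      # costly to fix, but can be insured or outsourced
    return Treatment.MITIGATE


register = [
    Risk("Publicly readable S3 bucket", likelihood=4, impact=5, mitigation_cost=1),
    Risk("Dev sandbox outage", likelihood=2, impact=2, mitigation_cost=3),
    Risk("Regional disaster", likelihood=2, impact=5, mitigation_cost=5, insurable=True),
]
for risk in register:
    print(f"{risk.name}: score={risk.score}, treatment={choose_treatment(risk).value}")
```

Note that the cheap, high-scoring risk gets mitigated while the low-scoring one is simply accepted. That is the same trade-off reasoning the exam looks for when the most restrictive option is not the best one.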

Scenario Example: Rapid Growth, Real Governance

In 2024, a fintech company went from 10 to 60 AWS accounts in under six months. Security needed to prevent resource creation outside of approved regions and enable GuardDuty everywhere automatically.

Best Approach: The team used AWS Organizations to apply SCPs for region lockdown, combined with automated account bootstrapping scripts that enabled GuardDuty by default. This solution leveraged automation and organizational guardrails—demonstrating mature, real-world AWS security thinking.

Key Takeaway: AWS rewards answers that use policy-driven, automated, and scalable solutions, exactly as in this scenario.
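A region-lockdown guardrail like the one in this scenario might look roughly like the SCP generated below. This is a sketch, not the company's actual policy: the function name, the region list, and the short list of exempted global-service actions are illustrative assumptions, and a production exemption list is usually longer. The `aws:RequestedRegion` condition key and the `NotAction` element are standard policy features.

```python
import json


def build_region_lockdown_scp(approved_regions: list[str],
                              exempt_actions: list[str]) -> str:
    """Return an SCP document (JSON string) that denies requests outside approved regions."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideApprovedRegions",
                "Effect": "Deny",
                # NotAction exempts global services that are not tied to one region.
                "NotAction": sorted(exempt_actions),
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {
                        "aws:RequestedRegion": sorted(approved_regions)
                    }
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Illustrative values only; adjust both lists to your own requirements.
print(build_region_lockdown_scp(
    approved_regions=["us-east-1", "eu-west-1"],
    exempt_actions=["iam:*", "organizations:*", "sts:*", "support:*"],
))
```

The generated document would then be attached to an organizational unit or the organization root through AWS Organizations, ideally from the same Infrastructure as Code pipeline that bootstraps new accounts, so that every new account inherits the guardrail without anyone editing its IAM policies.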

7. The Martial Parallel: Structure Enables Freedom

In martial arts, beginners see rules as limitations.

Advanced practitioners see them as:

• Stability

• Efficiency

• Freedom under pressure

A strong stance doesn’t restrict movement; it enables it. Security foundations work the same way.

When governance is clear:

• Teams move faster

• Incidents resolve more cleanly

• Mistakes are contained

• Learning compounds

When governance is weak:

• Everything feels urgent

• Security becomes adversarial

• Teams work around controls instead of with them

8. Exam Patterns for Domain 6

Here’s how AWS tests this domain:

• Account-level controls → AWS Organizations + SCPs

• Preventing risky actions globally → SCPs

• Balancing speed and security → Guardrails, not micromanagement

• Scaling security → Automation and standardization

• Aligning with best practices → Well-Architected Framework

If the question asks:

“Which solution is easiest to manage at scale?”

Exam cue: Choose the centralized, automated, policy-driven option.

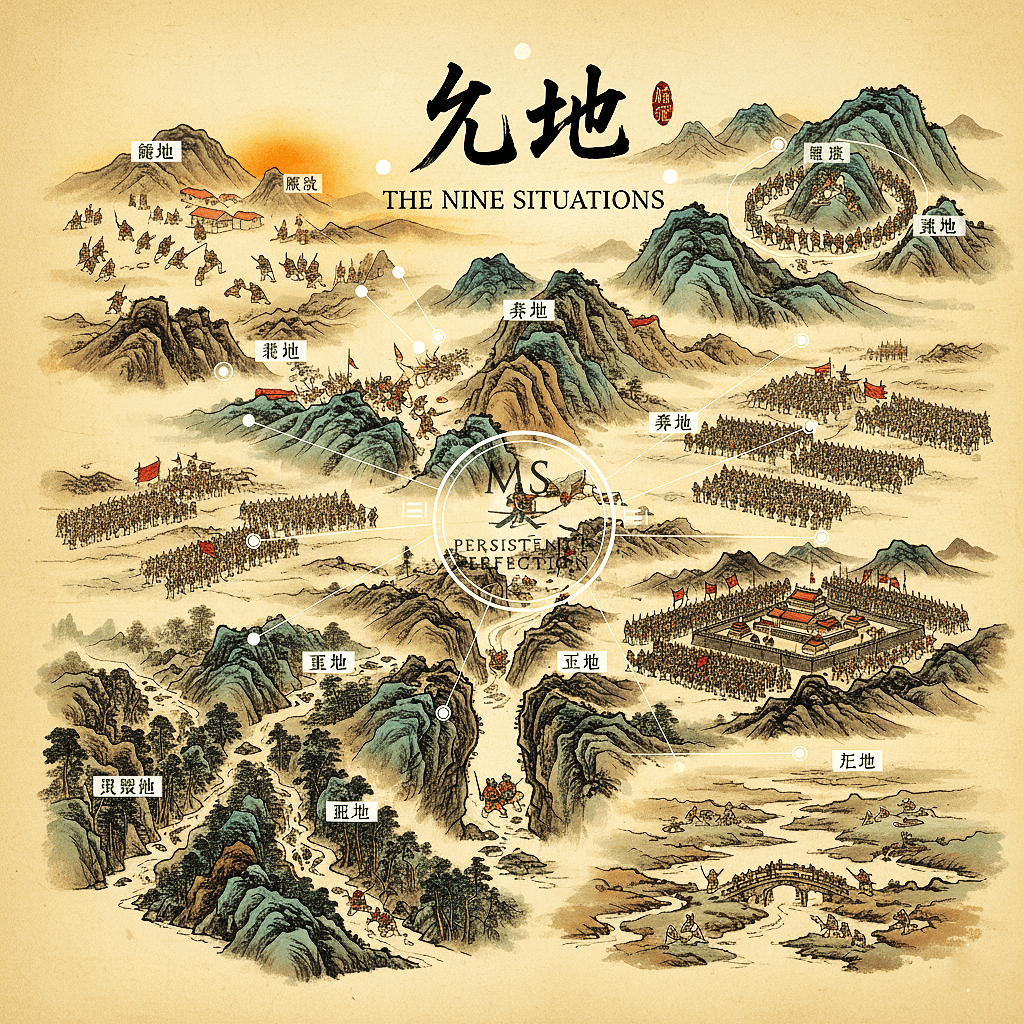

Final Capstone: The Six Domains as One System

Let’s put it all together.

Domain 1 — Detection

See clearly. You can’t secure what you can’t observe.

Detection creates awareness and prevents surprise.

Domain 2 — Incident Response

Move decisively without panic. Preparation and clarity turn chaos into choreography.

Domain 3 — Infrastructure Security

Shape the terrain. Segmentation, isolation, and least exposure reduce blast radius before attacks happen.

Domain 4 — Identity and Access Management

Decide who can act. Identity is the new perimeter. Precision here determines everything else.

Domain 5 — Data Protection

Guard what truly matters. Encryption, key management, and lifecycle controls protect the mission itself.

Domain 6 — Security Foundations and Governance

Hold the line without rigidity. Governance aligns people, process, and technology into a system that scales.

The Quiet Truth at the Center of AWS Security

AWS security is not about fear.

It is not about heroics.

It is not about locking everything down.

It is about clarity, balance, and intention.

The exam rewards those who:

• Pause before reacting

• Think in systems, not silos

• Choose scalable solutions

• Respect trade-offs

• Trust structure over force

That’s Zen. That’s architectural mastery. You’re ready.

When you sit for the exam, remember:

Awareness first.

Structure second.

Action last.

Everything else follows naturally.

Verification & Citations Framework | “Leave No Doubt”

Primary AWS Sources to Reference:

• AWS Shared Responsibility Model

• AWS Well-Architected Framework (Security Pillar)

• AWS Organizations Documentation

• Service Control Policies (SCPs)

• AWS Security Best Practices Whitepaper

• AWS Security Specialty Exam Guide (Domain 6)

Quick Reference Checklist: Domain 6 – Security Foundations & Governance

Key Takeaways (Scan before the exam!)

– Shared Responsibility Model: Always clarify what AWS secures vs. what you control.

– Use AWS Organizations and SCPs for policy-driven, organization-wide governance.

– Automate compliance: favor Infrastructure as Code, automated checks, and auto-enablement of detective/preventive controls.

– Lean on the AWS Well-Architected Framework for best-practice alignment.

– Favor scalable, centralized, and policy-driven solutions in exam scenarios.

– Always check the latest AWS documentation; services and features evolve quickly.

Final Tip: For scenario-based questions, ask: “Is this solution scalable, automated, and centralized?” If so, it’s likely the best choice.

Change Awareness Note:

AWS governance services evolve regularly. Always validate SCP behavior, Organizations features, and Well-Architected guidance against current AWS documentation. For the latest on each topic, see: