The Principle:

“When you leave your own country behind, and take your army across neighboring territory, you find yourself in a position of dependence on others. There you must watch for signs of strain.”— Sun Tzu

The Signs Before the Fall

Sun Tzu’s ninth chapter is about perception.

Here he shifts from action to awareness. It’s about how a commander reads fatigue, imbalance, and internal decay before they destroy an army from within.

This is not simply a lesson in combat. More importantly, it’s a lesson in foresight, and that distinction often separates a near-flawless victory from a crushing defeat.

Because every empire, every enterprise, every cyber defense effort eventually faces the same drift:

- expansion that outruns understanding

- momentum that hides exhaustion

- ambition that blinds leadership

- reach that exceeds resources

Armies break this way.

Companies implode this way.

Nations lose coherence this way.

In martial arts, this is the moment a fighter looks powerful but the small tells give them away: misaligned footwork, a subtle tell in the hands, a delayed return to guard, or the half-beat of hesitation that usually precedes success but this time leads to being hit.

Sun Tzu teaches us: if you can’t read the signs, you can’t survive the march.

Overreach: The Eternal Temptation

History loves proving this point.

Rome’s legions stretched from Britain to Mesopotamia until the empire could no longer feed its own frontiers. Britain built an empire “over all seas,” only to watch its overstretched supply lines rot from within.

The United States, victorious after World War II, constructed a global presence so vast that presence itself began replacing purpose.

Sun Tzu warned: The longer the march, the more fragile the army becomes.

Modern America has been marching for generations, militarily, economically, digitally, and each expansion has carried both pride and price.

Corporations experience the same decay. Cloud ecosystems suffer it even faster. What begins as strength (scale, reach, integration) becomes fragility when the cost of maintenance exceeds what the organization can tolerate.

In martial arts, overreach is the fighter who throws too many power shots, chasing a knockout rather than reading the opponent. They exhaust themselves long before the opponent is even breathing heavily.

Strength without pacing is just a longer route to collapse.

The Weight of Infinite Reach

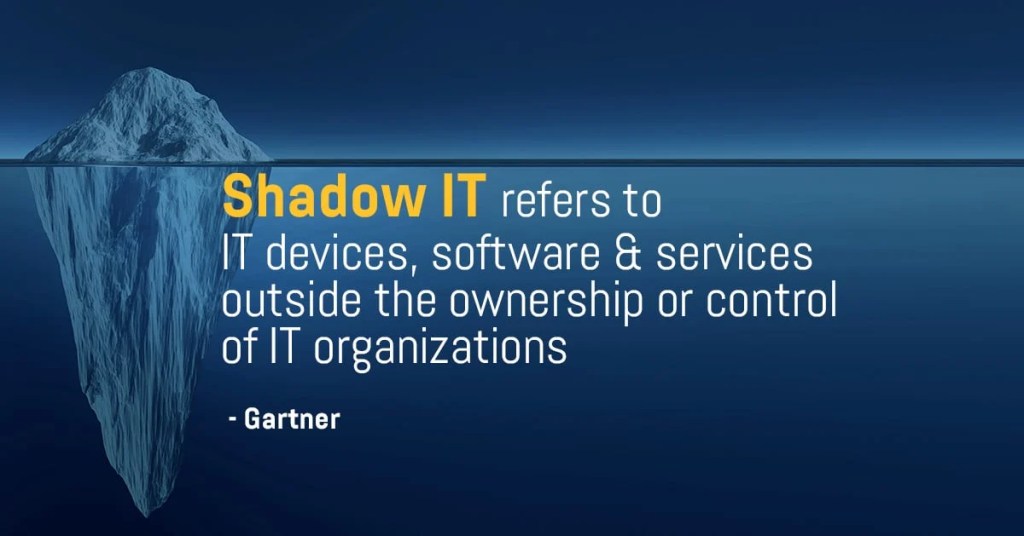

In cybersecurity, overreach becomes complexity collapse.

Each new department adopts a new tool. Each executive demands a new dashboard. Each vendor promises a universal cure.

Suddenly:

- no one sees the whole system

- logs pile up unread

- alerts become background noise

- integrations multiply into untraceable webs

- dependencies form faster than they can be understood

What once felt powerful becomes paralyzing.

Foreign policy suffers the same rhythm on a grander scale.

WWI.

WWII.

The Cold War.

Korea.

Vietnam.

Bosnia.

Iraq.

Afghanistan.

Each began with a clean, confident objective. Most devolved into attrition, mission creep, and moral fatigue. It can confidently be argued that mission creep began with WWI, but that’s a conversation for another time.

Sun Tzu would summarize it simply: When the troops are weary and the purpose uncertain, the general has already lost.

In BJJ, this is the fighter who scrambles nonstop, burning energy on transitions without securing position. Sometimes they don’t even need to scramble or change position, but they haven’t trained long enough to know that.

In boxing, it’s the puncher throwing combinations without footwork. The fighter simply stands in place, wondering why his punches never land.

In Kali, it’s the practitioner who commits too aggressively, losing awareness of angles and openings.

The march becomes too long.

The lines become too thin.

And collapse becomes inevitable.

Business: The Corporate Empire Syndrome

Businesses suffer the same fate as empires.

Growth attracts attention. Attention fuels pressure to expand. Expansion becomes compulsive.

Suddenly, the company is chasing:

- ten markets

- ten products

- ten strategies

- ten “high-priority” initiatives

Each of these demands its own “army.”

The parallels to national instability are perfect:

- Expansion without integration.

- Strategy scaling faster than understanding.

- Leaders mistaking size for stability.

Eventually, the weight becomes unsustainable.

The company can no longer “feed the army.”

Costs rise.

Culture cracks.

Purpose fades.

What killed Rome wasn’t the final battle; it was the slow erosion of balance across its territory.

Most businesses die the same way, and so do most digital ecosystems.

In Wing Chun, this is the collapse of structure, the moment you can see a fighter trying to do too much, forgetting the centerline, being everywhere except where they need to be.

Overreach is always invisible until it isn’t.

The Modern March: Cyber Empires and Digital Fatigue

Our networks are the new empires.

Every integration is a border.

Every API is a supply line.

Every vendor is an ally whose failure becomes your crisis, and you can never plan for when that crisis comes.

Cloud architecture multiplied this exponentially.

Organizations now live everywhere and nowhere at once.

Sun Tzu’s image of an army dependent on supply lines maps perfectly to modern digital infrastructure:

- Multi-cloud systems

- SaaS sprawl

- CI/CD pipelines with invisible dependencies

- Third-party integrations with inherited vulnerabilities

When visibility fades, risk multiplies. When dependencies become opaque, consequences become catastrophic.

A company that cannot trace its supply chain of code is like an army that has lost its map.
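To make the lost-map image concrete, here is a minimal sketch of tracing a supply chain of code. It assumes you can write down, for each system, what it directly relies on. Every name in it (`billing-app`, `payments-saas`, `crypto-lib`, and the `trace_supply_lines` helper) is hypothetical and invented for illustration, not drawn from any real environment or tool.

```python
from collections import deque

# Hypothetical dependency map: each system lists what it directly relies on.
# All names are invented for illustration.
DIRECT_DEPENDENCIES = {
    "billing-app": ["payments-saas", "auth-lib"],
    "payments-saas": ["cloud-a-queue"],
    "auth-lib": ["crypto-lib"],
    "cloud-a-queue": [],
    # "crypto-lib" is deliberately missing: nobody has documented it.
}


def trace_supply_lines(root, direct):
    """Return (reachable, unmapped) for everything `root` depends on.

    reachable: every direct and transitive dependency of root.
    unmapped:  dependencies that are relied upon but have no entry of
               their own, i.e. the blank parts of the map.
    """
    reachable, unmapped = set(), set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if node not in direct:
            # We depend on it, but no one has recorded what it depends on.
            unmapped.add(node)
            continue
        for dep in direct[node]:
            if dep not in reachable:
                reachable.add(dep)
                queue.append(dep)
    return reachable, unmapped


reachable, unmapped = trace_supply_lines("billing-app", DIRECT_DEPENDENCIES)
print("Supply lines:", sorted(reachable))
print("Off the map: ", sorted(unmapped))
```

The second set is the one worth watching. A dependency you rely on but have never mapped is exactly the stretch of road where the army marches blind.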

One outage.

One breach.

One geopolitical tremor.

And the entire formation can buckle.

We call this “scalability.”

Sun Tzu would call it: Marching too far from home.

Reading the Dust Clouds

Sun Tzu taught his officers to read subtle signs:

- dust patterns revealing troop movement

- birds startled into flight

- soldiers’ voices around the fire

- the speed of camp construction

- the tone of marching feet

Modern versions of those signs are just as revealing:

- Escalating ‘critical’ alerts no one addresses

- Morale fading under constant pressure

- Defensive posture maintained through inertia

- Strategies repeated because they worked once, not because they work now

- Partners showing hesitation before they show defection
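The first of those signs can be read from numbers most teams already collect. What follows is a rough sketch, assuming you have weekly counts of critical alerts raised and resolved. The figures, the three-week window, and the helper names `unaddressed_backlog` and `is_escalating` are all assumptions made up for illustration, not a standard metric.

```python
# Hypothetical weekly counts of critical alerts raised vs. resolved.
WEEKLY_ALERTS = [
    {"week": 1, "raised": 40, "resolved": 38},
    {"week": 2, "raised": 45, "resolved": 40},
    {"week": 3, "raised": 60, "resolved": 41},
    {"week": 4, "raised": 75, "resolved": 39},
    {"week": 5, "raised": 90, "resolved": 35},
]


def unaddressed_backlog(weeks):
    """Running total of critical alerts raised but not yet resolved."""
    backlog, total = [], 0
    for week in weeks:
        total += week["raised"] - week["resolved"]
        backlog.append(total)
    return backlog


def is_escalating(backlog, window=3):
    """True if the backlog grew in each of the last `window` weeks.

    The window size is an arbitrary assumption; tune it to your own rhythm.
    """
    recent = backlog[-(window + 1):]
    if len(recent) < window + 1:
        return False  # not enough history to judge a trend
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


backlog = unaddressed_backlog(WEEKLY_ALERTS)
print("Backlog by week:", backlog)
print("Dust cloud on the horizon:", is_escalating(backlog))
```

No single week in that data looks alarming on its own. The sign is in the direction of travel, which is the point Sun Tzu was making about dust.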

In WWI, the Lusitania offered one of the clearest “dust clouds” in modern history.

Germany declared unrestricted submarine warfare. British intelligence knew passenger liners were targets. The Lusitania was warned. The U.S. was warned. Even the ship’s cargo, which included munitions, made it a predictable target.

Yet the warnings were dismissed.

The signs were clear.

The perception failed.

And America’s reaction, too, was predictable: a “neutral nation” was pushed closer to war by an entirely foreseeable tragedy. Some might argue that certain American politicians sought to force the U.S. into the war. Again, that’s a discussion for another time.

Sun Tzu’s maxim remains timeless: The first to lose perception always loses position.

The Cost of Endless Motion

Overextension rarely appears dramatic at first.

It looks like success:

- revenue rising

- troops advancing

- dashboards expanding

- integrations multiplying

Then the consequences arise:

- fatigue

- erosion

- misalignment

- burnout

- doubt

You begin fighting just to justify how far you’ve marched.

In cybersecurity, this is the company chasing every vulnerability without fixing its architecture.

In foreign policy, it’s the nation fighting endless “small wars” that collectively cost more than stability ever would.

In boxing, it’s the fighter who keeps moving forward until they walk into exhaustion, not a punch.

In Kali, it’s the flow practitioner who adds complexity until their movement becomes noise rather than intent.

Sun Tzu warned: An army that has marched a thousand li must rest before battle.

Modern systems rarely rest. We only measure uptime, not wisdom.

Restraint as Renewal

The answer isn’t retreat; it’s an informed, measured rhythm.

Knowing when to:

- advance

- consolidate

- recover

- regroup

- reconsider the terrain

Strategic restraint is not weakness. It is self-preservation.

Rome could have lasted longer by fortifying fewer borders. Corporations could thrive longer by protecting focus instead of chasing scale. Nations could endure longer by strengthening their homeland defenses before ever wasting a single dime projecting power abroad.

Sun Tzu’s art was never about conquest. It was about sustainability.

Victory without stability is just defeat on layaway.

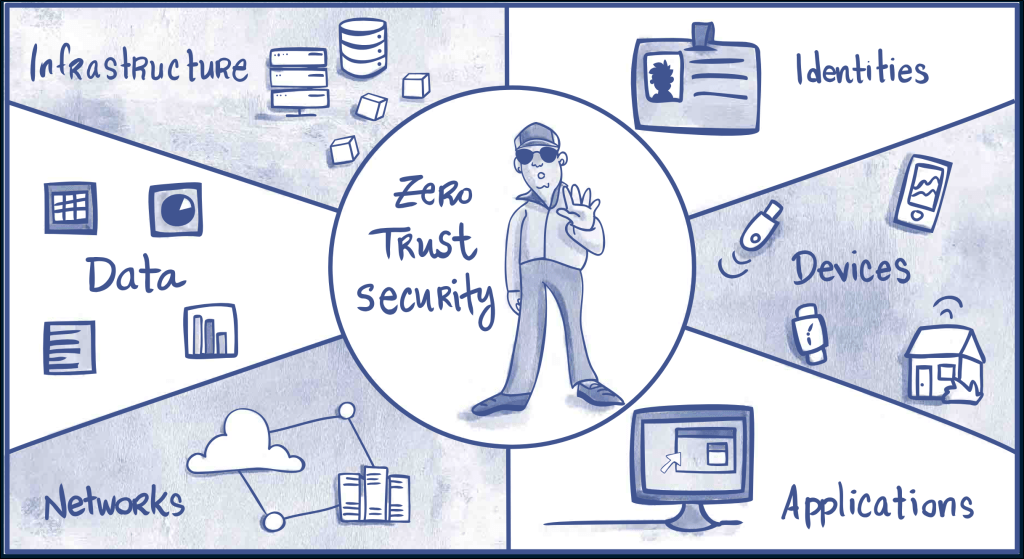

Awareness in Motion

Awareness is the antidote to overreach.

It requires honest measurement:

- what’s working

- what’s weakening

- what’s cracking

- what’s already lost

It requires humility: no army, business, or nation can move indefinitely without rest.

In cybersecurity, awareness is visibility.

In leadership, it’s listening.

In foreign policy, it’s simply remembering.

Awareness doesn’t stop momentum. It calibrates it.

It’s the half-beat between breaths that keeps the system alive.

Bridge to Chapter X | Terrain

Sun Tzu ends this chapter by looking outward again.

Once you’ve learned to read fatigue, imbalance, and decay within, the next step is to read the environment beyond.

The internal determines how you survive the external.

Which returns us to the opening principle: When you leave your own country behind…you find yourself in a position of dependence on others.

An army on the march teaches us to see ourselves. Chapter X, “Terrain,” teaches us to read the world:

- its obstacles

- its openings

- its deception

- its opportunities

- its traps

Awareness of self means little without awareness of landscape. That’s where the next battle begins.